Information visualization and proxemics: Design opportunities and empirical findings

Mikkel R Jakobsen, Yonas Sahlemariam Haile, Søren Knudsen, and Kasper Hornbæk

Abstract

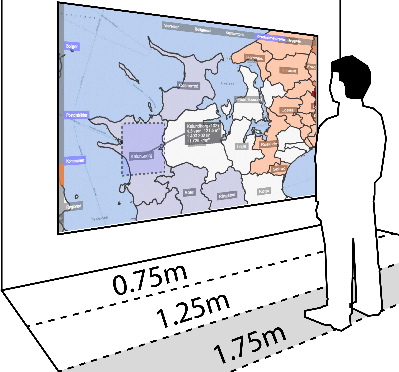

People typically interact with information visualizations using a mouse. Their physical movement, orientation, and distance to visualizations are rarely used as input. We explore how to use such spatial relations among people and visualizations (i.e., proxemics) to drive interaction with visualizations, focusing here on the spatial relations between a single user and visualizations on a large display. We implement interaction techniques that zoom and pan, query and relate, and adapt visualizations based on tracking the user's position relative to a large high-resolution display. Alternative prototypes are tested in three user studies and compared with baseline conditions that use a mouse. Our aim is to gain empirical data on the usefulness of a range of design possibilities and to generate more ideas. Among other things, the results show promise for changing zoom level or visual representation with the user's physical distance to a large display. We discuss possible benefits and potential issues to avoid when designing information visualizations that use proxemics.

Cite as (BibTeX):

@article{jakobsen2013information,
  title = {Information visualization and proxemics: Design opportunities and empirical findings},
  author = {Jakobsen, Mikkel R and Haile, Yonas Sahlemariam and Knudsen, S{\o}ren and Hornb{\ae}k, Kasper},
  journal = {IEEE transactions on visualization and computer graphics},
  volume = {19},
  number = {12},
  pages = {2386--2395},
  year = {2013},
  publisher = {IEEE}
}